I’ve been thinking all week about a movie I saw on Monday night at the recommendation of my friend Clay Hebert, and I still haven’t fully reconciled what I saw and heard, or worked out how to interpret the thoughts it keeps generating.

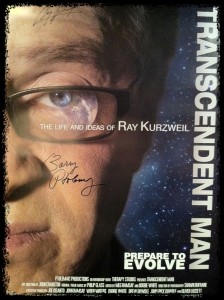

*Transcendent Man* is a documentary directed by Barry Ptolemy chronicling “the life and controversial ideas of luminary Ray Kurzweil,” a man who wrote his first computer program at age 15 (in 1963) and built a computer in 1965 that put him on a CBS TV show.

It’s hard to question Kurzweil’s credentials: they’re profound. He’s been inventing technology, from flatbed scanners to music synthesizers, for decades. But it’s his predictions, first laid out in his book *The Singularity Is Near: When Humans Transcend Biology*, that form the foundation of this film. As the official movie website puts it: “Kurzweil predicts that with the ever-accelerating rate of technological change, humanity is fast approaching an era in which our intelligence will become increasingly non-biological and millions of times more powerful. This will be the dawning of a new civilization enabling us to transcend our biological limitations. In Kurzweil’s post-biological world, boundaries blur between human and machine, real and virtual. Human aging and illness are reversed, world hunger and poverty are solved, and we cure death.”

He is talking about a world that looks more like the *Terminator* films than our own: a world where humans build the smartest machines ever imagined, machines that are smarter than their creators and that blend biology and technology well beyond today’s limits.

What I’ve been struggling with isn’t so much the “can this really happen?” question, because I believe it can (though I’m not so sure about the curing-death part). The question that hit me like a bucket of ice water over the head is “can this really happen as fast as Kurzweil says it will?” He talks about the exponential growth of technology and believes the pace we’ve seen in the past will carry over to these futuristic machines. For example:

- Music: look how long it took to move away from records… but once we did, 8-tracks gave way to cassettes, which gave way to CDs, which gave way to digital music.

- Digital storage: I’m too young to remember a computer that stored data on a tape reel. My first PC in the mid-80s had a 5 1/4″ floppy drive. Look at how quickly those drives became 3 1/2″ ones, and then how quickly those disappeared in favor of optical discs… and now some companies, like Apple, are starting to drop the DVD drive from many of their machines. The DVD, whether on a PC or in your living room, is doomed by streaming media from Amazon, Netflix, etc.

- Cell phone: I remember (again in the 80s) seeing my first cell phone. The mechanic at the garage where my dad took our cars had one. It was big, bulky and carried in a bag, and it cost thousands of dollars. I still remember my initial reaction: sure, this would be great for people like paramedics (I was a huge Rescue 51 fan), but not only was it totally unaffordable for the average consumer, why would you need to make a non-emergency phone call while you were on the move? How quickly that technology has changed… phones are now as small as a credit card.

Whether you agree with Kurzweil or not, if you use technology today and have been at all affected by how it has changed within your lifetime, you should find a way to catch this flick. It’ll keep you up at night wondering what the future might bring.

#

This is really awesome! Thanks for sharing these useful articles!

#

Last evening I quickly read this wonderful book on a visiting friend’s Kindle. I am blown away by its assertion that the neocortex largely consists of 100-neuron modules that act as primitive, general-purpose pattern-recognition atoms and link with their peers in hierarchies to create the mind! But what is the functional significance of the 60,000-neuron cortical columns? And what a pity that the chart on the transistor counts of microprocessors makes numerous (and obvious) errors in transcribing its cited Intel source. Is it the only chart with mistakes? Is there a Web page for errata?